At my college graduation, our keynote speaker was expected to speak for over 90 minutes! The graduation was outside in late May and the 800 graduating students were in full military dress uniform: heavy wool jackets with high collars, long wool trousers, starched shirts and shirt garters. If you don’t know what a shirt garter is, consider yourself lucky. It is a piece of elastic that connects your uniform shirt to your socks and must have been invented by a Nazi party fashionista.

I knew I wouldn’t be able to pay attention to some round-bellied politician pontificating for over an hour in the heat, so I decided to take a Nintendo Game Boy to graduation with me. Since there is nowhere to carry a Game Boy in dress uniform, I built a neck strap out of shoelaces and paperclips to carry it. Not very pretty, but it worked. And when the speaker got up to give his keynote, I popped open Yoshi World and went to a happier place for a while. If you’ve ever played Yoshi World, you know it’s a terrible game. But it was better than my reality right then and there.

By all measures, video games have ruled the entertainment world for the last 20 years.

- In 2009, Avatar and Modern Warfare 2 shared the same opening week and Modern Warfare 2 grossed 200% more than ticket sales for Avatar

- In 2010, Black Ops grossed 300% more than Toy Story 3

- In 2012, when the first Avengers movie came out, the sequel to Black Ops outsold the blockbuster by $403m

But why? Why are these games so popular, and more importantly, how can we learn from their success?

The answers can be found in 1988.

The top two video games of 1988 belonged to one system – Nintendo. Nintendo dominated the market, and its highest-selling games were sequels to previous hits: Mega Man 2 and Super Mario Bros. 3. Emerging companies like Sega and Namco were trying hard to break into Nintendo’s market. They created copycats of popular Nintendo games, merged with video game producers that had previously partnered with Nintendo, and otherwise worked to block existing partners from reaching Nintendo. That was the way the world worked: copy the success of others, starve the competition, compete for a limited share.

Nintendo saw the hostility of the market and decided to explore a new idea: a new game that would break every rule in the video game world. At the time, it was believed that games had to be linear – built on a set storyline where memorized patterns and repetitive practice would allow anyone to beat the game. Anyone who has played Mario Bros., Tomb Raider or Metal Gear Solid knows what linear gameplay feels like. Linear games were all the rage and video-game publishers wanted to be in the game, so they did whatever it took to be players.

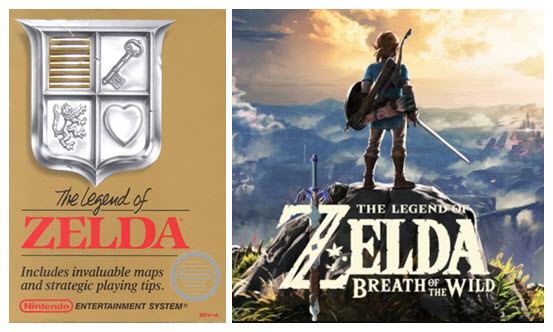

Amid all the infighting and conflict, Nintendo released its special project – The Legend of Zelda. Zelda was one of the first non-linear console games ever produced and to this day is considered by gaming experts to be “The greatest, most influential game of all time.”

Zelda allowed players to explore an open world. The play was non-linear, meaning every individual player had a different experience. It was the first game where players could choose how to equip their character, save their progress, and complete side-quests in addition to the primary story. This variety gave gamers infinite options. Every time you played, the experience was unique. Where other games forced you to follow a set path, Zelda allowed you to write your own story. The legend was your own.

Video games are a powerful lens through which to view life. Many people see life as a linear game: a predictable series of events that must be completed in a certain order before you can move to the next level. Because we know the pattern and have seen others complete the story, we don’t feel compelled to pay attention. So instead, we turn to video games. We turn to a non-linear world where anything is possible. But there is a secret out there that nobody talks about – a game cheat that very few know about and even fewer use: our lives can be non-linear. We can be anything we want to be. We can build our own legend.

The world we live in today is not much different from that of 1988. Businesses copy one another, mergers outnumber innovations, and everyone fights for a limited share. We see new examples every day: Snapchat Stories become Instagram Stories; Instagram Live becomes Facebook Live; Uber begets Lyft begets Gett, Juno, and a host of other rideshare apps. The game is linear – predictable, repetitive and boring. The world needs people who are willing to change the game.

I hope I don’t disappoint anyone when I say, “video games can teach us.” They teach us determination, focus, commitment. They teach us how to struggle with frustration, how to collaborate with teammates, how to persevere and overcome. Parents, I encourage you to sit next to your resident gamer and see how they rise to the challenge in a non-linear virtual world. See the confidence, intelligence and problem-solving skills you instilled in them come alive on the screen. You will be awe-struck if you let yourself watch. The minds that can master these games are the minds that can change our world.

You men and women are a living legacy for your families. You represent a generation of college-bound students with the opportunity to shape history. University life, like all of life, can be linear or non-linear. You can do what others have done before you and compete for a limited share, or you can opt for a different adventure, challenge yourself, and create something incredible.

We live in an open world: a world where you can choose your equipment, save your progress, find allies and fight evil. Side-quests are everywhere and boss battles lie ahead. You deserve more than simple patterns and bonus lives. Recognize the infinite possibilities that lie before you. Don’t jump from Goomba to Goomba, hoping for fireballs, super mushrooms or invincibility stars. Instead, explore your world, discover your potential, and build your legend.